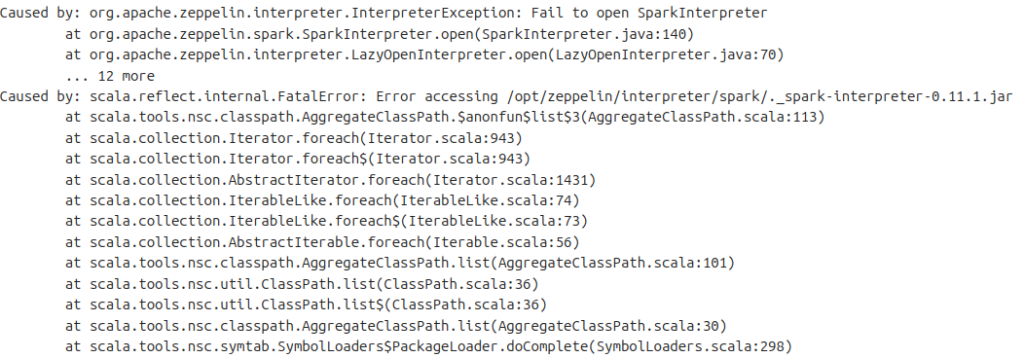

To install a recent Zeppelin (I recommend 0.11.0, since 0.11.1 has bugs) and Spark 3.5.1, we need to go through several steps.

1. Required packages

sudo apt-get install openjdk-11-jdk build-essential

Or, if you run into errors, install the previous Java version instead:

sudo apt-get install openjdk-8-jdk-headless

2. Spark installation
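Spark needs a working JVM, so before downloading it may be worth confirming which Java the shell actually picks up, especially if both JDK packages ended up installed. The sketch below is only an illustration: `java_major` is a hypothetical helper name, and it assumes the usual `java -version` output where the version string appears in double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reduce a Java version string to its major number.
# Legacy scheme "1.8.0_402" -> 8, modern scheme "11.0.22" -> 11.
java_major() {
  local v="$1"
  v="${v#1.}"          # drop the legacy "1." prefix used up to Java 8
  echo "${v%%[._-]*}"  # keep everything before the first '.', '_' or '-'
}

if command -v java >/dev/null 2>&1; then
  # java prints its version on stderr; the quoted field holds the version string
  raw="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
  echo "Detected Java major version: $(java_major "$raw")"
else
  echo "java not found on PATH; install one of the JDK packages from step 1"
fi
```

If the reported version is neither 8 nor 11, `sudo update-alternatives --config java` can be used on Ubuntu to switch the default.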

wget -c https://dlcdn.apache.org/spark/spark-3.5.1/spark-3.5.1-bin-hadoop3.tgz

sudo tar -xvvf spark-3.5.1-bin-hadoop3.tgz -C /opt/

sudo mv /opt/spark-3.5.1-bin-hadoop3 /opt/spark

3. Zeppelin installation

wget -c https://downloads.apache.org/zeppelin/zeppelin-0.11.0/zeppelin-0.11.0-bin-all.tgz

sudo tar -xvvf zeppelin-0.11.0-bin-all.tgz -C /opt/

sudo mv /opt/zeppelin-0.11.0-bin-all /opt/zeppelin

4. Install MambaForge

wget -c https://github.com/conda-forge/miniforge/releases/download/24.1.2-0/Mambaforge-24.1.2-0-Linux-x86_64.sh

bash Mambaforge-24.1.2-0-Linux-x86_64.sh -b -p ~/anaconda3

~/anaconda3/bin/mamba init

source ~/.bashrc

mamba create -n test python=3.10

5. Run and Configure Zeppelin

sudo /opt/zeppelin/bin/zeppelin-daemon.sh start

Then open Zeppelin in the browser (by default at http://localhost:8080), go to the Interpreter settings (in the top-right menu) and search for Spark. Adjust the following properties:

- SPARK_HOME = /opt/spark
- PYSPARK_PYTHON = /home/dev/anaconda3/envs/test/bin/python
- PYSPARK_DRIVER_PYTHON = /home/dev/anaconda3/envs/test/bin/python

Here /home/dev is my home directory; replace it with your own.
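A wrong path in these settings usually only shows up later as an interpreter error, so it can help to check from a terminal that the files exist before saving. This is a minimal sketch under stated assumptions: `check_exec`, `SPARK_HOME_DIR` and `PY_BIN` are names made up for this example (not Zeppelin or Spark settings), and the defaults assume the install locations used in the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a path exists and is executable.
check_exec() {
  if [ -x "$1" ]; then
    echo "ok: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Assumed defaults; override them if you installed elsewhere.
SPARK_HOME_DIR="${SPARK_HOME_DIR:-/opt/spark}"
PY_BIN="${PY_BIN:-$HOME/anaconda3/envs/test/bin/python}"

# spark-submit ships in the bin/ directory of the Spark distribution
check_exec "$SPARK_HOME_DIR/bin/spark-submit" || true
check_exec "$PY_BIN" || true
```

Any line starting with `missing:` points at a value that needs fixing before the Spark interpreter can start.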

Now you are done. Enjoy using Zeppelin on your Ubuntu machine!