Many people have reported poor performance on NVIDIA RTX cards after installing Stable Diffusion AUTOMATIC1111. I will explain a simple way to install it and fix RTX 4090 performance within 5 minutes.

First, make sure you have Python 3.10 installed on Windows. You can use Anaconda or a native Python installation.
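To confirm which interpreter you will actually be using, a short check like the one below is enough. It is a sketch that assumes, as this guide does, that any 3.10.x release is acceptable; `is_supported_python` and `REQUIRED` are hypothetical names, not part of the web UI.

```python
import sys

# Assumption: the web UI is run on Python 3.10.x, as this guide recommends.
REQUIRED = (3, 10)


def is_supported_python(version_info=sys.version_info) -> bool:
    """Return True if the interpreter's major.minor matches REQUIRED."""
    return tuple(version_info[:2]) == REQUIRED


if __name__ == "__main__":
    found = ".".join(str(part) for part in sys.version_info[:3])
    if is_supported_python():
        print(f"OK: Python {found}")
    else:
        print(f"Warning: found Python {found}, but 3.10 is expected")
```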

1. Clone the Stable Diffusion Git repository to your local directory

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

2. Install Stable Diffusion with xformers

This part is tricky. By default, the installer pulls in Torch 2.1.0, but the latest xformers build requires Torch 2.0, so later you may run into errors like:

AssertionError: Torch is not able to use GPU; add --skip-torch-cuda-test to COMMANDLINE_ARGS variable to disable this check
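This assertion is raised when torch cannot see the GPU. Before relaunching, you can check which torch build the web UI's virtual environment actually contains by running a small script with `venv\Scripts\python.exe`. This is a sketch under two assumptions: that the xformers build you want expects Torch 2.0.x (the `EXPECTED_TORCH` value, taken from the paragraph above), and that comparing major.minor versions is enough. The helper names are hypothetical.

```python
import importlib.util

# Assumption: the xformers build you want expects Torch 2.0.x.
EXPECTED_TORCH = "2.0"


def major_minor(version: str) -> str:
    """Reduce a version string like '2.1.0+cu121' to '2.1'."""
    return ".".join(version.split("+")[0].split(".")[:2])


def torch_matches(installed: str, expected: str = EXPECTED_TORCH) -> bool:
    """True if the installed torch has the same major.minor as expected."""
    return major_minor(installed) == major_minor(expected)


def torch_gpu_status():
    """Return (torch_version, cuda_available), or None if torch is not installed."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    return str(torch.__version__), torch.cuda.is_available()


if __name__ == "__main__":
    status = torch_gpu_status()
    if status is None:
        print("torch is not installed in this environment")
    else:
        version, cuda_ok = status
        print(f"torch {version}, CUDA available: {cuda_ok}")
        if not torch_matches(version):
            print(f"torch {version} does not match the expected {EXPECTED_TORCH}.x")
```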

The solution for installing both xformers and a compatible torch inside Stable Diffusion is to pass the --xformers argument when you run the installer:

./webui.bat --xformers

3. Upgrade cuDNN

To improve speed, you can upgrade cuDNN by downloading it from NVIDIA at https://developer.nvidia.com/rdp/cudnn-archive. Extract the archive and copy the contents of its “bin” folder into the following directory, replacing the files already there:

stable-diffusion-webui\venv\Lib\site-packages\torch\lib

4. Install System Info to check all versions

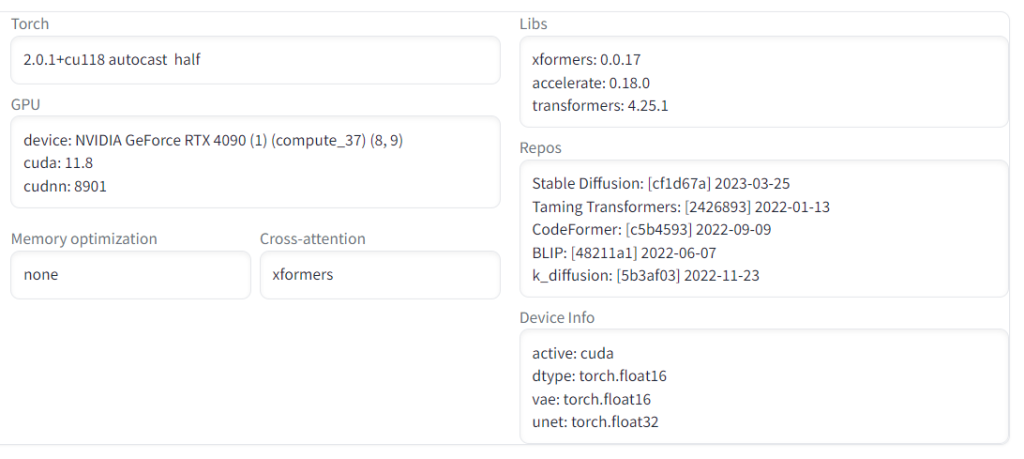

Go to Extensions -> Available -> Load from URL, then search for “system”. Install the extension, and it will show information about your Stable Diffusion installation.
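If you also want to confirm from a terminal that torch is loading the cuDNN you copied in, a sketch like the one below can be run with `venv\Scripts\python.exe`. It relies on `importlib.metadata.version`, `torch.version.cuda` and `torch.backends.cudnn.version()`; the decoding rule in `decode_cudnn_version` is my understanding of cuDNN's integer version scheme (8.x uses major*1000, 9.x uses major*10000), so treat it as an assumption. The helper names are hypothetical.

```python
from importlib import metadata


def decode_cudnn_version(v: int) -> tuple:
    """Decode torch.backends.cudnn.version() into (major, minor, patch).

    Assumed encoding: cuDNN 8.x is major*1000 + minor*100 + patch (8700 -> 8.7.0);
    cuDNN 9.x is major*10000 + minor*100 + patch (90100 -> 9.1.0).
    """
    if v >= 10000:
        return v // 10000, (v % 10000) // 100, v % 100
    return v // 1000, (v % 1000) // 100, v % 100


def package_versions(names):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    print(package_versions(["torch", "xformers"]))
    try:
        import torch

        print("CUDA:", torch.version.cuda)
        raw = torch.backends.cudnn.version()  # None if cuDNN is unavailable
        if raw is not None:
            print("cuDNN:", ".".join(map(str, decode_cudnn_version(raw))))
    except ImportError:
        print("torch is not installed in this environment")
```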

5. Start generating images!

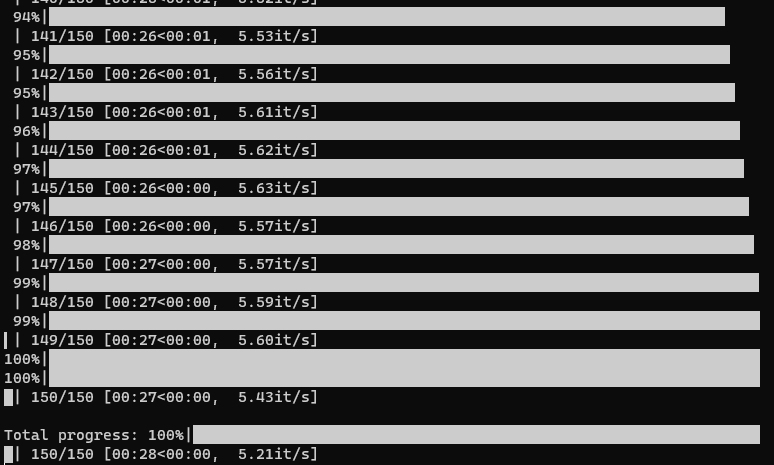

Run the prompt “Cat in a hat” with 150 sampling steps and a batch size of 8. This finishes in 27-29 seconds.
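To compare this run with it/s figures quoted elsewhere, the timing can be turned into rates. This sketch assumes each of the 150 steps denoises all 8 images at once, so it reports batched steps per second as well as per-image steps per second; `throughput` is a hypothetical helper name.

```python
def throughput(steps: int, batch_size: int, seconds: float) -> dict:
    """Derive rates from one timed run, assuming each step processes the whole batch."""
    return {
        "steps_per_s": steps / seconds,
        "images_per_s": batch_size / seconds,
        "image_steps_per_s": steps * batch_size / seconds,
    }


# Bounds of the 27-29 second range quoted above.
for seconds in (27.0, 29.0):
    rates = throughput(steps=150, batch_size=8, seconds=seconds)
    print(seconds, {name: round(value, 2) for name, value in rates.items()})
```

For 27 s this works out to about 5.56 batched steps/s, 0.30 images/s and 44.4 image-steps/s; for 29 s, about 5.17, 0.28 and 41.4. Which of these an it/s counter shows depends on how it counts batched steps, so it is worth checking before comparing numbers.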

6. Considerations for improving speed

The SD 2.1 model is faster than SD v1-4 or v1-5.

xformers is roughly 25% faster.

Running on a 5.8 GHz CPU is about 50% faster than on a 3 GHz processor, something I discovered two days ago and posted about separately.

cuDNN 8.7 is maybe 300% faster. Again, that depends on whether you were at 13 it/s before, on a 4090.
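Percent-faster claims are easy to misread, so here is the arithmetic spelled out. This is a small sketch with hypothetical helper names; it reads "x% faster" as a (new/old - 1) * 100 increase in it/s and plugs in the 13 and 39.7 it/s figures from this section.

```python
def speedup(old_its: float, new_its: float) -> float:
    """How many times faster the new rate is (2.0 means twice as fast)."""
    return new_its / old_its


def percent_faster(old_its: float, new_its: float) -> float:
    """Percentage increase in it/s, i.e. (new / old - 1) * 100."""
    return (speedup(old_its, new_its) - 1.0) * 100.0


# Figures quoted in this section: 13 it/s before, 39.7 it/s after.
print(round(speedup(13, 39.7), 2), round(percent_faster(13, 39.7), 1))
```

Going from 13 to 39.7 it/s is about 3.05 times the original rate, or roughly 205% faster, so "300% faster" here most likely means "about three times as fast".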

You can apply the workaround and still not reach the 39.7 it/s I get. I run everything on a very fast Intel CPU with very fast memory, using a PyTorch build compiled natively for that CPU, CUDA 12 (maybe 1%), and --opt-channelslast (maybe 2%). All of these together help.
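To see where your own setup lands, it helps to time a fixed number of iterations the same way before and after each change. The harness below is a generic sketch: `fake_step` is a hypothetical stand-in for one sampling step. If your step launches CUDA work, it should call `torch.cuda.synchronize()` before returning, otherwise the timer only measures how fast kernels are queued.

```python
import time
from typing import Callable


def measure_its(step: Callable[[], None], steps: int = 20, warmup: int = 3) -> float:
    """Time `steps` calls of `step` after `warmup` untimed calls; return iterations/s."""
    for _ in range(warmup):
        step()  # untimed warm-up calls absorb one-time setup costs
    start = time.perf_counter()
    for _ in range(steps):
        step()
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero reading
    return steps / elapsed


def fake_step() -> None:
    """Hypothetical stand-in for one sampling step; replace with real work."""
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(f"{measure_its(fake_step):.1f} it/s")
```

Keeping the warm-up separate matters because the first few iterations often include one-time costs that would otherwise drag the average down.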

The speed of your CPU (not the GPU) is the new data point that might lead to a complete understanding of this. Today I'm analyzing where the CPU-side overhead occurs in A1111.
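If you want to look for CPU-side overhead yourself, Python's built-in `cProfile` and `pstats` modules are one place to start. The sketch below profiles a hypothetical stand-in function; in practice you would wrap whichever A1111 code path you are investigating. Keep in mind that time spent waiting on the GPU also shows up in a CPU profile, so the report needs some interpretation.

```python
import cProfile
import io
import pstats


def cpu_overhead_report(fn, *args, top: int = 10) -> str:
    """Profile one call of fn and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    fn(*args)
    profiler.disable()
    buf = io.StringIO()
    pstats.Stats(profiler, stream=buf).sort_stats("cumulative").print_stats(top)
    return buf.getvalue()


def hypothetical_step(n: int) -> int:
    """Stand-in for the Python-side work around one sampling step."""
    return sum(i % 7 for i in range(n))


if __name__ == "__main__":
    print(cpu_overhead_report(hypothetical_step, 200_000))
```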